Module 4

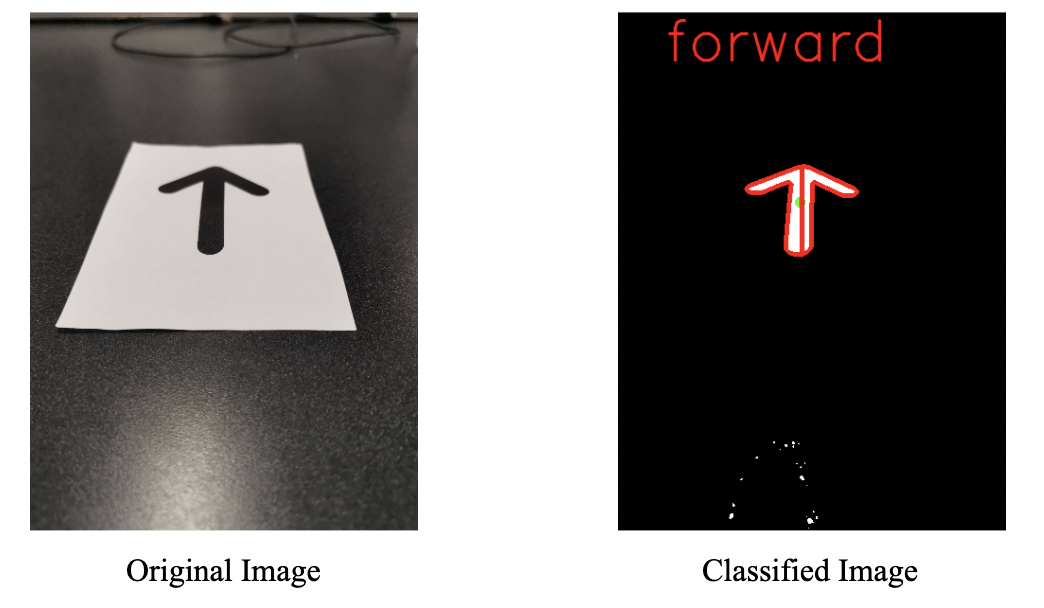

All About Contours // Reading Arrows

Overview

Building on the previous modules, this module introduces contours, OpenCV’s more flexible approach to shape detection. The lecture slides give a brief conceptual overview of what contours are, but the core of this module is the skeleton code. It walks you through using characteristics of a contour, such as convexity, moment of inertia, center of mass, and more, to distinguish one contour from another. The goal is to preprocess an image containing a symbolic instruction, like a stop sign or an arrow, and use OpenCV’s contour methods to recognize that symbol as stop, forward, right, or left.

Lecture Slides

Objectives

By the end of Module 4, you will have used a variety of OpenCV’s contour methods to implement a longer function that classifies an image of a sign as one of four instructions: stop, forward, right, or left.

Skeleton Code

In this Jupyter notebook, you start with some unprocessed .jpg images of a stop sign and several arrows. Using what you learned in Modules 1-3, plus a new flood fill function, you will write a function that isolates the black shape from the rest of the image.

Then, you will write a larger function built from conditional statements that test a contour’s features, such as its centroid, convexity, area, and more. These tests let you tell stop, forward, right, and left apart. We have also provided a function to help you visualize how your classification scheme is working.

Helpful Tips/FAQ

Checkoff Questions

- How would you find the direction your car should travel if, in addition to the signs we already have, we also had a backwards arrow sign?

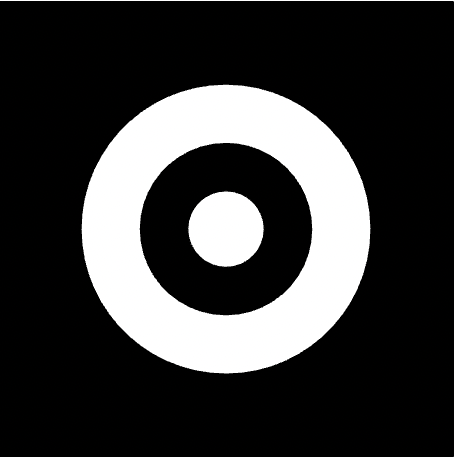

- What would the hierarchy list look like for a bullseye with three concentric circles? Assume the background is distinct from the bullseye’s outermost circle and there is no “noise” in the image.

- Why must we invert our image of the arrows before using OpenCV’s contour functions? In other words, why do we want a black background instead of a white one?